Introduction:

A new tool called Nightshade lets artists protect their digital artwork by subtly altering its pixels before uploading it, so that AI models trained on copies scraped without permission are disrupted. The tool arrives as AI companies such as OpenAI and Google face legal challenges over the unauthorized use of artists' content.

Working Principles:

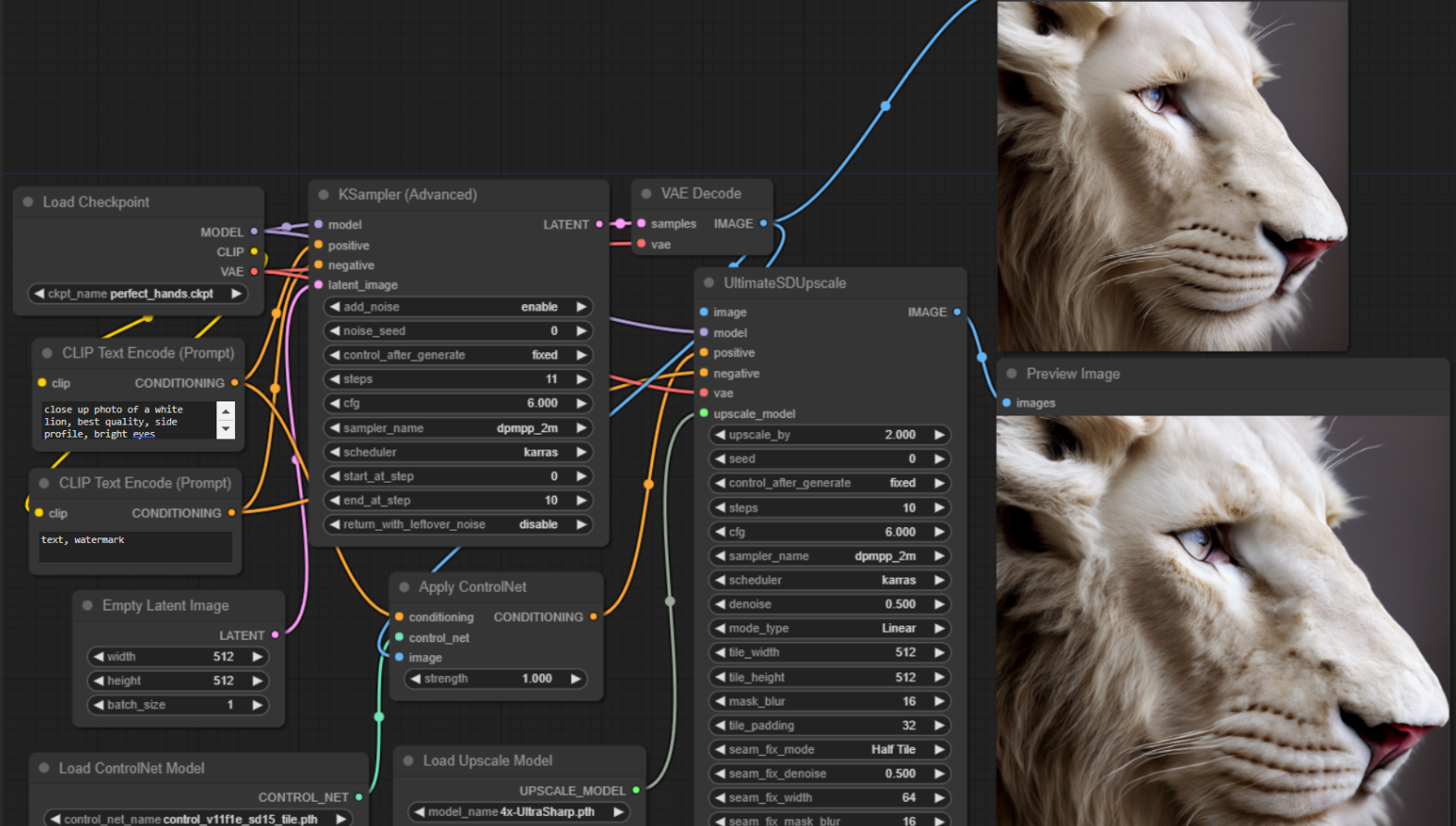

Nightshade works by making small, deliberate changes to an artwork's pixels before it is posted online. The changes are imperceptible to the human eye, but they alter how a machine-learning model interprets the image, so a model trained on copies scraped without authorization learns the wrong associations. Developed alongside Glaze, the tool gives artists a way to protect their unique style and artistic integrity without changing how their work looks to human viewers.
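The general idea of a pixel change that is too small to see but still pushes a model in a chosen direction can be illustrated with a minimal sketch. It assumes a hypothetical linear scorer (`score = sum(w * p)`) standing in for a model's interpretation of an image, and applies a single sign-of-gradient step in the style of FGSM, capped at `epsilon` intensity levels per pixel. This is not Nightshade's actual algorithm, which is not reproduced here; the functions `score` and `perturb` and all sample values are illustrative assumptions.

```python
from typing import List


def score(pixels: List[int], weights: List[float]) -> float:
    """Hypothetical linear 'model': a higher score means the image reads
    more like the target concept."""
    return sum(p * w for p, w in zip(pixels, weights))


def perturb(pixels: List[int], weights: List[float], epsilon: int) -> List[int]:
    """One FGSM-style step: move each 8-bit pixel by at most `epsilon` in the
    direction that raises `score` (its gradient w.r.t. pixel i is weights[i])."""
    out = []
    for p, w in zip(pixels, weights):
        step = epsilon if w > 0 else -epsilon if w < 0 else 0
        # Clip to 0..255 so the result is still a valid 8-bit image.
        out.append(min(255, max(0, p + step)))
    return out


# Illustrative values only: a six-pixel grayscale "image" and made-up weights.
image = [120, 200, 35, 250, 0, 90]
weights = [0.8, -0.5, 0.3, 1.2, -0.7, 0.0]

poisoned = perturb(image, weights, epsilon=3)
print(poisoned)  # [123, 197, 38, 253, 0, 90]
print(max(abs(a - b) for a, b in zip(image, poisoned)))  # 3
print(score(poisoned, weights) > score(image, weights))  # True
```

The `epsilon` cap is what keeps the change imperceptible in this sketch: no pixel moves more than three intensity levels out of 255, yet every change that is made pushes the score toward the target.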

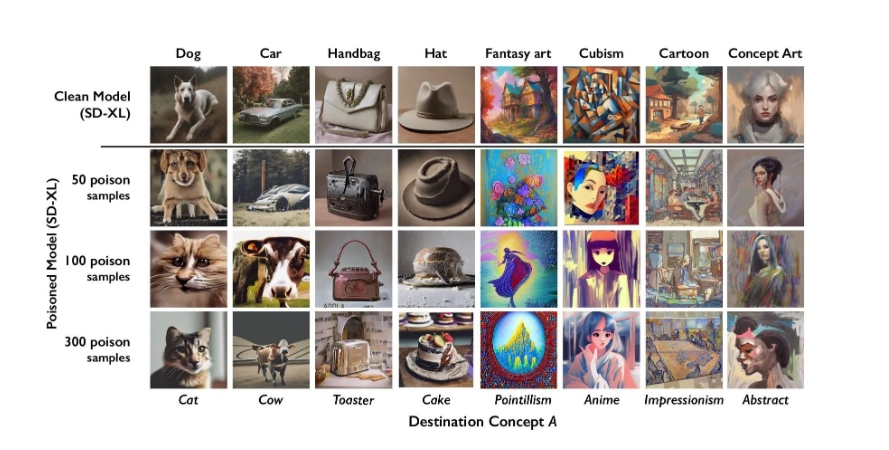

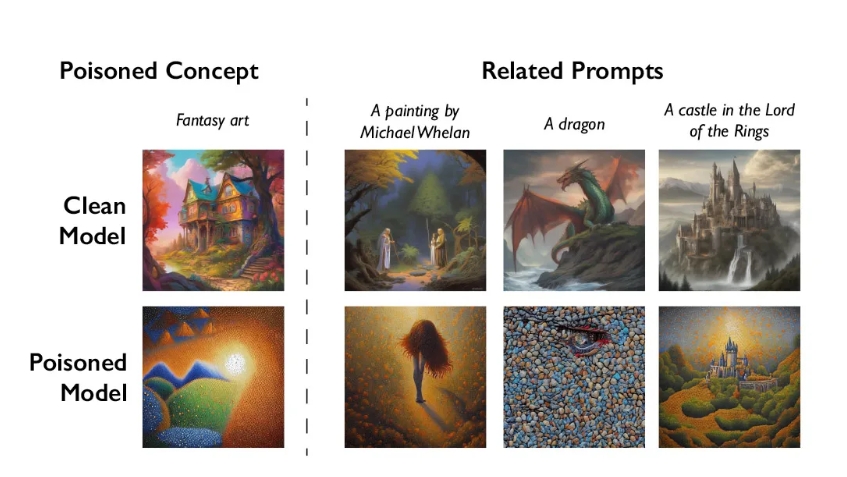

By poisoning the data that AI models are trained on, Nightshade can cause significant, unpredictable disruptions in those models' output. For example, pixel changes that look minor to a person could lead a model trained on the altered images to confuse a dog with a cat, or a car with a cow. The technique exploits a security vulnerability inherent in generative AI models: because they are trained on vast amounts of data scraped from the internet, anyone who can place targeted alterations in that data can manipulate what the models learn.
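Why poisoned training data shifts a model's output can be shown with a deliberately simplified toy. Here a hypothetical "model" learns one feature vector per caption by averaging every scraped image that carries that caption, and the poisoned samples are captioned "dog" while their features sit where "cat" images do, which is what a feature-shifting perturbation aims for. Real text-to-image models learn very differently, and the sample counts below say nothing about how many poisoned images a real model needs; `train`, `nearest_concept`, `ANCHORS`, and all values are assumptions for illustration.

```python
from typing import Dict, List, Tuple

Vec = Tuple[float, float]


def train(samples: List[Tuple[str, Vec]]) -> Dict[str, Vec]:
    """Hypothetical toy 'training': learn the mean feature vector per caption."""
    totals: Dict[str, Tuple[float, float, int]] = {}
    for caption, (x, y) in samples:
        sx, sy, n = totals.get(caption, (0.0, 0.0, 0))
        totals[caption] = (sx + x, sy + y, n + 1)
    return {c: (sx / n, sy / n) for c, (sx, sy, n) in totals.items()}


def nearest_concept(point: Vec, anchors: Dict[str, Vec]) -> str:
    """Name the clean concept whose feature anchor is closest to `point`."""
    return min(anchors, key=lambda c: (anchors[c][0] - point[0]) ** 2
               + (anchors[c][1] - point[1]) ** 2)


# Hypothetical feature-space anchors for two clean concepts.
ANCHORS: Dict[str, Vec] = {"dog": (0.0, 0.0), "cat": (10.0, 10.0)}

clean = [("dog", ANCHORS["dog"])] * 50 + [("cat", ANCHORS["cat"])] * 50
# Poisoned samples: captioned "dog", but their features sit on the "cat" anchor.
poison = [("dog", ANCHORS["cat"])] * 60

clean_model = train(clean)
poisoned_model = train(clean + poison)

print(nearest_concept(clean_model["dog"], ANCHORS))     # dog
print(nearest_concept(poisoned_model["dog"], ANCHORS))  # cat
```

Because this toy simply averages, the poison has to outweigh the clean "dog" images; the point is only that images whose features contradict their captions drag the learned concept toward the wrong one.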

Future Application:

Nightshade could shift the balance of power toward artists, pushing AI companies to respect artists' rights and potentially to pay royalties for their work. Artists such as Eva Toorenent and Autumn Beverly see these tools as essential for protecting their creations and regaining control over their work online. As long as these vulnerabilities persist in AI models, developing and adopting tools like Nightshade will remain crucial for safeguarding artists' intellectual property online.